Let me introduce you to the newest member of the GxP family: good machine learning practice, or GMLP.

What’s machine learning?

Machine learning is when computer algorithms improve automatically through experience, by learning from data. It’s why I trust Waze: this car navigation app uses data from all the Wazers around me to tell me today’s best route, taking congestion into account.

“GxP” is a general abbreviation for “good practice” quality guidelines and regulations. The “x” stands for the various fields, for example, good manufacturing practice, or GMP. So good machine learning practice is the set of good practices to follow when implementing machine learning.

Why does the FDA care?

This technique is also used in the life sciences, for instance to automate the manufacturing process or to power diagnostic tools. Here’s an example. Imagine you produce inhalers for asthma patients. To make sure your final product delivers the right dose into the lungs, you take pictures of every inhaler part along the production process. You could have colleagues look at those pictures, or you could teach a machine to automatically tell the good parts from the bad ones, making sure only the best quality reaches the patient.

Another example is a wearable or even implantable device that picks up measurements and tells you what to do: “go on,” “call a doctor” or “hang on, I’m calling an ambulance.” In both examples, you’ve taught a machine to make a decision: either on the quality of the inhaler parts or on your own condition. Some maths is run, and a decision is returned. That maths is often referred to as a “model.” How can you be sure your machine has been well taught? This is where good machine learning practice comes in.
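To make “some maths is run, and a decision is returned” concrete, here is a toy sketch. It assumes, purely for illustration, that each inhaler part has already been reduced to two hypothetical measurements (a dimensional deviation and a surface-defect score); the weights and threshold are made up and do not come from any real inspection system.

```python
from dataclasses import dataclass

@dataclass
class PartMeasurement:
    # Hypothetical features extracted from the picture of one inhaler part
    deviation_mm: float   # how far a key dimension is from spec
    defect_score: float   # 0.0 (clean) to 1.0 (heavily scratched)

# Hypothetical parameters: in reality these would be learned from data
WEIGHTS = (2.0, 1.5)
THRESHOLD = 1.0

def model(part: PartMeasurement) -> str:
    """The 'maths': a weighted sum of the features compared to a threshold."""
    score = WEIGHTS[0] * part.deviation_mm + WEIGHTS[1] * part.defect_score
    return "reject" if score > THRESHOLD else "accept"

print(model(PartMeasurement(deviation_mm=0.1, defect_score=0.2)))  # accept
print(model(PartMeasurement(deviation_mm=0.4, defect_score=0.5)))  # reject
```

Real inspection models are far more complex, but the shape is the same: measurements go in, a decision comes out.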

OK, so what is it about?

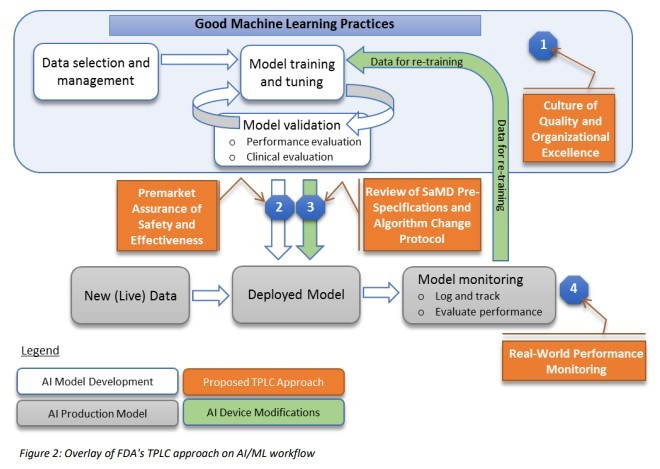

Unfortunately, GMLP takes more than having a battery of data scientists create incredibly reliable models. In Figure 2 of the FDA’s “Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD),” the data scientists’ work covers only the white boxes: data selection, model training and model validation.
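As a rough illustration of those three white boxes, here is a toy sketch on synthetic data. The one-feature threshold “model,” the 80/20 split and all function names are assumptions made for illustration; real data scientists would use far richer models and tooling.

```python
import random

def select_data(records):
    """Data selection: keep only records that carry a label."""
    return [(x, y) for x, y in records if y is not None]

def train_threshold(train):
    """Model training: pick the cut-off that classifies the training set best."""
    candidates = sorted(x for x, _ in train)
    def accuracy(t):
        return sum((x > t) == y for x, y in train) / len(train)
    return max(candidates, key=accuracy)

def validate(threshold, held_out):
    """Model validation: measure accuracy on data the model never saw."""
    return sum((x > threshold) == y for x, y in held_out) / len(held_out)

# Synthetic data: defect score x, label y (True = bad part); None = unlabelled
rng = random.Random(42)
records = [(s, s > 0.6) for s in (rng.random() for _ in range(200))]
records += [(0.5, None)] * 5
data = select_data(records)
rng.shuffle(data)
split = int(0.8 * len(data))
train, held_out = data[:split], data[split:]

t = train_threshold(train)
print(f"threshold={t:.2f}, validation accuracy={validate(t, held_out):.2f}")
```

The key design point is that validation uses data held back from training, so the accuracy figure says something about parts the model has not seen yet.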

The IT department will often be asked to deploy the model. “Deploy” in our examples means:

- Making sure the model is used by the machine that inspects the inhaler parts.

- Making sure that the wearable runs the validated model when it generates its advice.
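One common way to make sure a device runs the validated model, and not an older or altered copy, is to record a cryptographic fingerprint of the model file at validation time and compare it at deployment. This is a minimal sketch of that idea using Python’s `hashlib`; the byte strings stand in for hypothetical serialized model files, and this is not how any particular product does it.

```python
import hashlib

def fingerprint(model_bytes: bytes) -> str:
    """SHA-256 fingerprint of a serialized model file."""
    return hashlib.sha256(model_bytes).hexdigest()

def is_validated_model(deployed_bytes: bytes, validated_fingerprint: str) -> bool:
    """True only if the deployed artifact is byte-for-byte the validated one."""
    return fingerprint(deployed_bytes) == validated_fingerprint

# Hypothetical serialized models (in practice: files read from disk or the device)
validated = b"weights=2.0,1.5;threshold=1.0"
approved = fingerprint(validated)   # recorded when the model was validated

print(is_validated_model(validated, approved))                          # True
print(is_validated_model(b"weights=2.0,1.5;threshold=0.9", approved))  # False
```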

We’re not done yet. So far, your data scientists have created a state-of-the-art model, and your beloved IT staff has made sure it actually runs. GMLP now asks you to keep a close eye on your model’s performance and to adjust the model based on what you learn. But what does keeping a close eye on it mean? To stick with the Waze analogy: when you tell Waze that you’ve hit a roadblock, it uses that information to calculate new routes for your fellow Waze users. So in our examples, “keeping a close eye” could mean:

- Still having a sample of inhaler parts inspected manually. This allows you to confirm the model’s performance.

- Offering the user the opportunity to undo any action the wearable or implantable device takes automatically.
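For the inhaler line, still having samples looked at manually can be as simple as sending a random fraction of parts to a human inspector. A minimal sketch, assuming a hypothetical 2% review rate and made-up part IDs; each part is picked independently, which suits a continuous production line, and the fixed seed only makes the example reproducible.

```python
import random

def pick_for_manual_review(part_ids, rate, seed=None):
    """Randomly select roughly `rate` of the parts for a human inspector."""
    rng = random.Random(seed)
    return [pid for pid in part_ids if rng.random() < rate]

parts = [f"part-{i:04d}" for i in range(1000)]
sample = pick_for_manual_review(parts, rate=0.02, seed=7)
print(len(sample), "parts go to manual inspection")
```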

Every check you make either confirms that the model is running well or tells you that it needs to go back to school, that is, back to your data scientists. This loop, in which a deployed model is checked against reality and sent back to your data scientists when needed, is called “model operations,” or ModelOps.
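One turn of that loop can be sketched in a few lines: compare the model’s verdicts with the human spot checks and decide whether the model keeps running. The 95% agreement threshold and the weekly verdicts below are hypothetical; in practice the acceptable threshold would come from your own risk assessment.

```python
def agreement(model_verdicts, human_verdicts):
    """Share of spot-checked parts where the model agreed with the inspector."""
    if len(model_verdicts) != len(human_verdicts) or not model_verdicts:
        raise ValueError("need two equally long, non-empty lists of verdicts")
    matches = sum(m == h for m, h in zip(model_verdicts, human_verdicts))
    return matches / len(model_verdicts)

def modelops_check(model_verdicts, human_verdicts, min_agreement=0.95):
    """Keep the model running, or send it back to school."""
    score = agreement(model_verdicts, human_verdicts)
    action = "keep running" if score >= min_agreement else "send back to data scientists"
    return score, action

# Hypothetical spot-check results for two weeks
week_1 = modelops_check(["accept"] * 18 + ["reject", "accept"],
                        ["accept"] * 18 + ["reject", "reject"])
week_2 = modelops_check(["accept"] * 20,
                        ["accept"] * 18 + ["reject"] * 2)
print(week_1)   # (0.95, 'keep running')
print(week_2)   # (0.9, 'send back to data scientists')
```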

How do we do it?

OK, so we get it: We have to keep an eye on our model when it’s out in the wild. And when it starts acting iffy, we call it in and create a new model. Got it. But how?

There’s a multitude of tools out there: MLflow, Pachyderm, Kubeflow, DataRobot, Algorithmia, TensorBoard, etc. These all have one thing in common: They are not built for dummies. They are built for smart data scientists with coding skills, not for normal people like you and me.

Does it have to be hard?

Must it be that hard to govern your models? No, it needn’t be. Let’s take our hint from this press release and have a look at what the FDA itself uses.

A couple of clicks later, you stumble upon a tool called “Model Manager.” I know: not a very creative name. It is what it is. OK, beloved dummies, your turn now. Check out this video by my colleague Alex and you will know what to do:

- Tell the bright people in your company, whether they use Python, R, TensorFlow or any other smart people’s tool, to push their models into your Model Manager. Have them watch the video; they’ll get it.

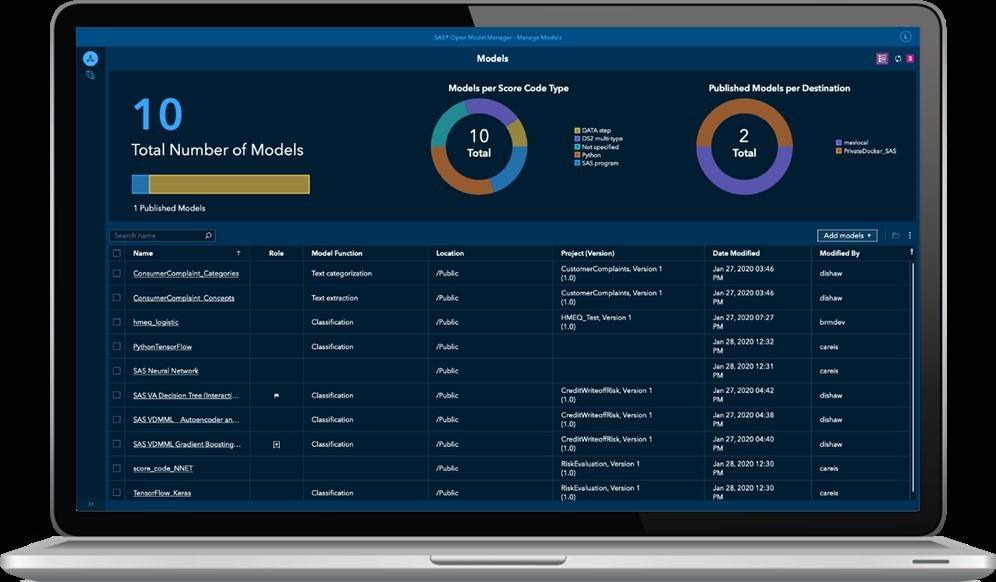

- You’re in control now. No need to code anything. In the dashboard, also called “model registry,” you can pull up the model uploaded by your smart colleagues.

This is a dummy’s dashboard. Every row is a group of models; the pie chart in the center tells you how many models you manage, and the pie chart on the right tells you how many are deployed.

- The FDA asks you to test your model before you deploy it. So test it: click the test button and throw some random data at it. Don’t deploy it if “computer says no.”

- Deploy it. It’s right-click > deploy. I can’t make it more difficult than that.

- Now have your bright people push the feedback data back into the Model Manager.

- Check your model’s performance on the “Performance” tab. This is where you see whether your model is indeed starting to act iffy. If so, let your data scientists know and have them send you a new model.
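Behind the clicks, the register, test and deploy steps follow a simple pattern. The sketch below is a toy, in-memory illustration of that pattern in plain Python; it is not the Model Manager API, and every class and function name in it is hypothetical. It registers two versions of a model, throws random data at each before deployment, and reports the two numbers the dashboard’s pie charts show: models managed and models deployed.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

@dataclass
class RegisteredModel:
    name: str                              # the model group it belongs to
    version: int
    predict: Callable[[float, float], str]
    deployed: bool = False

@dataclass
class Registry:
    """Toy registry: each model group holds one or more versions."""
    groups: Dict[str, List[RegisteredModel]] = field(default_factory=dict)

    def register(self, model: RegisteredModel) -> None:
        self.groups.setdefault(model.name, []).append(model)

    def counts(self) -> Tuple[int, int]:
        """(models managed, models deployed), like the two pie charts."""
        everything = [m for versions in self.groups.values() for m in versions]
        return len(everything), sum(m.deployed for m in everything)

def smoke_test(model: RegisteredModel, n: int = 100, seed: int = 0) -> bool:
    """Throw random inputs at the model; pass only if every answer is valid."""
    rng = random.Random(seed)
    try:
        return all(model.predict(rng.random(), rng.random()) in {"accept", "reject"}
                   for _ in range(n))
    except Exception:
        return False  # the model crashed: "computer says no"

def deploy(model: RegisteredModel) -> None:
    """Refuse to deploy anything that fails the smoke test."""
    if not smoke_test(model):
        raise RuntimeError(f"{model.name} v{model.version} failed its test")
    model.deployed = True

registry = Registry()
good = RegisteredModel("inhaler-inspection", 1,
                       lambda d, s: "reject" if 2.0 * d + 1.5 * s > 1.0 else "accept")
broken = RegisteredModel("inhaler-inspection", 2, lambda d, s: None)
for m in (good, broken):
    registry.register(m)

deploy(good)
try:
    deploy(broken)
except RuntimeError as err:
    print(err)             # inhaler-inspection v2 failed its test
print(registry.counts())   # (2, 1)
```

A random-data smoke test only catches models that crash or return nonsense; it says nothing about accuracy, which is why the performance monitoring step still matters after deployment.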

Done. Welcome to the world of GMLP!